Companies are steadily realizing the need to be more agile and enhance their situational awareness – not only within their own operations but also in relation to their customers and the broader business ecosystem. Situational awareness involves the capacity for both individuals and systems to comprehend the present state of affairs and swiftly react or adapt when necessary.

Business leaders may understand all too well that scalability issues during high-demand periods can lead to lost business. They might also recognize the opportunities lost to slow response times, or the threat posed by digitally savvy newcomers who pair artificial intelligence (AI) with real-time data to introduce innovative services.

Furthermore, businesses recognize that stellar customer service involves keeping customers informed about status changes impacting their orders or service execution. Companies aiming to address these problems are starting to see that promptly identifying a problem at its source gives them a financial upper hand. They are also acknowledging the competitive advantage of shorter cycle times.

Nowadays, shortening cycle times, operating with greater resilience, and systematically becoming more customer-aware require an organization to adopt a different kind of information flow centered on events. An event is a change in state: for instance, flipping a light switch from off to on, or pausing and then resuming playback in a streaming service. In the context of applications, a state change could be a new order, a change to an existing order, or an order cancellation.
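To make the idea of an event as a state change concrete, here is a minimal Python sketch. The `Event` fields, the event type names, and the `apply_event` function are all hypothetical and chosen only for illustration; real event formats vary by product and organization.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any


@dataclass(frozen=True)
class Event:
    """An immutable record of one state change (field names are illustrative)."""
    event_type: str          # e.g. "order.created"
    entity_id: str           # which order changed
    payload: dict[str, Any]  # the new values carried by the event
    occurred_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


def apply_event(state: dict[str, dict], event: Event) -> dict[str, dict]:
    """Return a new state with the event applied; the input is not mutated."""
    orders = dict(state)
    if event.event_type == "order.created":
        orders[event.entity_id] = dict(event.payload, status="open")
    elif event.event_type == "order.changed":
        orders[event.entity_id] = {**orders[event.entity_id], **event.payload}
    elif event.event_type == "order.cancelled":
        orders[event.entity_id] = {**orders[event.entity_id], "status": "cancelled"}
    return orders


# The three application-level state changes named in the text.
events = [
    Event("order.created", "o-1", {"item": "lamp", "qty": 1}),
    Event("order.changed", "o-1", {"qty": 2}),
    Event("order.cancelled", "o-1", {}),
]

state: dict[str, dict] = {}
for e in events:
    state = apply_event(state, e)
```

Applying the events in order rebuilds the current state of the order, which is why a stream of events can stand in for repeated queries against the source system.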

While events themselves aren’t a new concept, the ways in which events are gathered, spread, and used can transform an organization from being slow in capturing, understanding, and responding to challenges and opportunities to becoming more predictive and proactive in its operations.

The Importance and Accessibility of Event-Driven Design

The concept of asynchronous event-driven design and event-driven architecture dates back to the late 1980s with the advent of digital messaging technology. This technology created data events and published them to systems that had registered interest in receiving them, a pattern referred to as “publish and subscribe” (PubSub).
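The publish-and-subscribe pattern can be sketched in a few lines of Python. This is a simplified, in-process illustration, not how any particular messaging product is implemented: the `InMemoryBroker` class and the topic name are assumptions, and delivery here is synchronous, whereas real brokers deliver asynchronously over a network.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class InMemoryBroker:
    """Toy broker: publishers and subscribers only know topic names."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        """Register interest in a topic."""
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> int:
        """Hand the event to every subscriber of the topic; return how many."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(event)
        return len(handlers)


broker = InMemoryBroker()
billing_inbox: list[dict] = []
shipping_inbox: list[dict] = []

# Two independent systems subscribe to the same topic.
broker.subscribe("orders", billing_inbox.append)
broker.subscribe("orders", shipping_inbox.append)

# The publisher does not know who, or how many, will receive the event.
delivered = broker.publish("orders", {"order_id": "o-1", "status": "new"})
```

The point of the design is decoupling: a new subscriber can be added without changing the publisher.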

However, the adoption of PubSub was limited due to the high cost of building and maintaining these ultra–low latency systems. The past decade has seen a reconsideration of the use and value of events due to several factors, including the emergence of new types of ephemeral data that were event-based, the valuable atomic-level data contained in the event logs of databases, and the ability to collect events from various distributed sources.

Addressing the Issue of Harnessing Events at Varying Speeds

The technology supporting PubSub was too swift for many use cases. Businesses were keen to speed up their processes and analytics capabilities, but ‘fast’ is a relative term. For example, collecting data hourly is quicker than daily, but sub-second collection could be too rapid.

Apache Kafka, an early open-source project, resolved this issue by collecting log data events at speeds determined by the project team. This approach expanded the usage of events, underscored their importance, and made it easier for organizations to use event data at different speeds, giving them time to gradually adapt their systems to operate at higher velocities. As a result, PubSub and event-driven architecture have gained wider adoption.
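One way to picture consumption at different speeds is an append-only log in which each consumer keeps its own read position. The sketch below is loosely modeled on that idea and is not Kafka's client API; `EventLog`, `Consumer`, and `batch_size` are hypothetical names.

```python
class EventLog:
    """Append-only log; events stay available after they are read."""

    def __init__(self) -> None:
        self._events: list[dict] = []

    def append(self, event: dict) -> int:
        """Store the event and return its offset (position in the log)."""
        self._events.append(event)
        return len(self._events) - 1

    def read(self, offset: int, max_events: int) -> list[dict]:
        """Return up to max_events events starting at offset."""
        return self._events[offset:offset + max_events]


class Consumer:
    """Reads from the log at its own pace by tracking its own offset."""

    def __init__(self, log: EventLog, batch_size: int) -> None:
        self.log = log
        self.batch_size = batch_size
        self.offset = 0
        self.seen: list[dict] = []

    def poll(self) -> int:
        """Fetch the next batch and advance the offset; return the batch size."""
        batch = self.log.read(self.offset, self.batch_size)
        self.seen.extend(batch)
        self.offset += len(batch)
        return len(batch)


log = EventLog()
for i in range(5):
    log.append({"seq": i})

fast = Consumer(log, batch_size=5)  # e.g. a near-real-time dashboard
slow = Consumer(log, batch_size=2)  # e.g. a periodic batch job

fast.poll()  # reads all five events at once
slow.poll()  # reads only the first two; the rest wait in the log
```

Because reading does not remove events, the slower consumer eventually sees exactly what the faster one saw, only later.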

Rise of Event Brokers and Event Meshes

The technology that initially underpinned PubSub was message-oriented middleware that temporarily stored messages in queues. However, the advent of new forms of PubSub technology, including open-source offerings like Apache Kafka, Apache Pulsar, and Redis, as well as modern commercial options, has altered the category to facilitate event data storage or caching, thereby eliminating the need for queuing and making data permanently available.

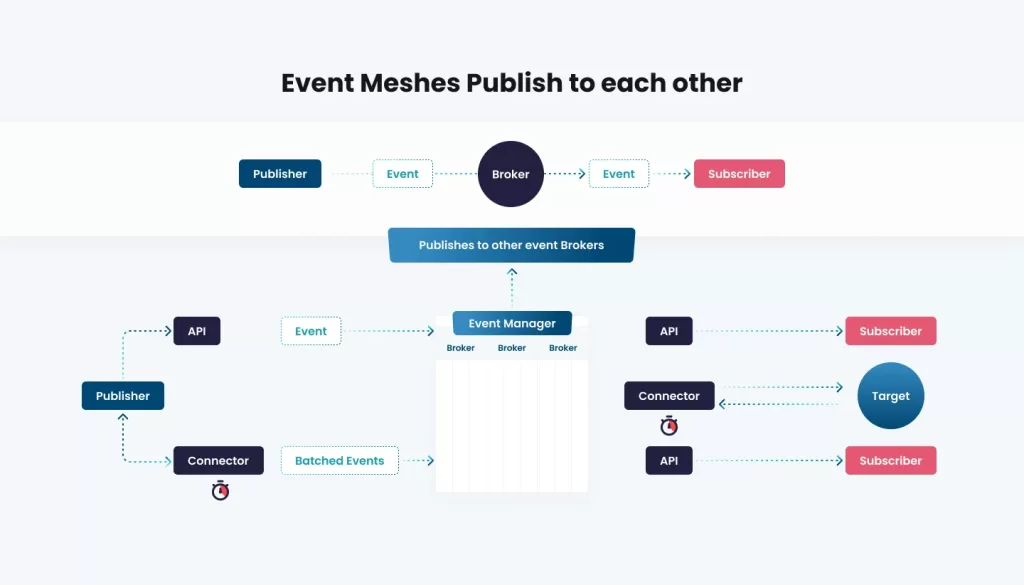

This led to the elevation of the term “event”, rebranding the category as “event brokers”. This category now also caters to all types of deployment models, such as software, Software as a Service (SaaS), and private cloud-hosted event broker software. As shown in Figure 1, event brokers also assume the role of event producers, as they can subscribe to the topics of other event brokers, resulting in an array of different event brokers and resilience among brokers.

As depicted in Figure 1, the event broker (Broker 1) at the bottom of the illustration is an event manager that supports varying speeds. Conversely, the event broker at the top (Broker 2) prioritizes the high-speed delivery of events. These events might either pass through Broker 2 or be cached by it.

All these technologies guarantee the reliable delivery of events and incorporate callback functions to acknowledge event receipt, as well as a retry mechanism when an acknowledgment is not received. Measures are taken to ensure that the events delivered aren’t duplicates.
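The combination of acknowledgment, retry, and duplicate suppression described above can be sketched as follows. This is an illustrative simplification under stated assumptions: the function and class names are hypothetical, every event is assumed to carry a unique `id`, and real brokers add timeouts, backoff, and persistence.

```python
from typing import Callable


def deliver_with_retry(
    event: dict, send: Callable[[dict], bool], max_attempts: int = 3
) -> int:
    """Call send(event) until it returns True (acknowledged).

    Returns the number of attempts used; raises RuntimeError if no
    acknowledgment arrives within max_attempts.
    """
    for attempt in range(1, max_attempts + 1):
        if send(event):
            return attempt
    raise RuntimeError(
        f"event {event['id']} not acknowledged after {max_attempts} attempts"
    )


class IdempotentReceiver:
    """Processes each event id once, so retries do not create duplicates."""

    def __init__(self) -> None:
        self._seen_ids: set[str] = set()
        self.processed: list[dict] = []

    def receive(self, event: dict) -> bool:
        if event["id"] not in self._seen_ids:
            self._seen_ids.add(event["id"])
            self.processed.append(event)
        return True  # acknowledge, including for duplicates


receiver = IdempotentReceiver()
calls = {"n": 0}


def flaky_send(event: dict) -> bool:
    """Simulate a lost acknowledgment: the first ack never reaches the sender."""
    calls["n"] += 1
    ack = receiver.receive(event)
    return ack and calls["n"] > 1


attempts = deliver_with_retry({"id": "evt-1", "type": "order.created"}, flaky_send)
```

In this run the event reaches the receiver twice, because the first acknowledgment is lost and the sender retries, but it is processed only once.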

Formation of Event Meshes

When Broker 1 interacts with Broker 2, an event mesh is created. Event meshes play a pivotal role in facilitating event delivery across disparate systems. Different broker participants within a mesh may undertake distinct roles. For instance, a broker might be included for capacity expansion, another to meet low-latency demands, while yet another might filter for specific event types like an order.

An order might be captured by a deployment of Apache Kafka as part of a larger collection, and another broker within the mesh might subscribe to these orders to disseminate them to all applications tuned into that event broker for new orders. Meshes support both parallel and sequential processing.
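A mesh in which one broker subscribes to another and forwards only certain event types can be sketched like this. The `MeshBroker` class, the `bridge` helper, and the topic and event type names are hypothetical; the sketch shows only the subscribe-and-republish idea, not any vendor's mesh implementation.

```python
from typing import Callable, Optional

Handler = Callable[[dict], None]


class MeshBroker:
    """Toy broker that can act as both a publisher and a subscriber."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._handlers: dict[str, list[Handler]] = {}

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._handlers.setdefault(topic, []).append(handler)

    def publish(self, topic: str, event: dict) -> None:
        for handler in self._handlers.get(topic, []):
            handler(event)


def bridge(
    source: MeshBroker,
    target: MeshBroker,
    topic: str,
    keep: Optional[Callable[[dict], bool]] = None,
) -> None:
    """Make target a subscriber of source, republishing events that pass keep."""

    def forward(event: dict) -> None:
        if keep is None or keep(event):
            target.publish(topic, event)

    source.subscribe(topic, forward)


broker_1 = MeshBroker("broker-1")  # collects all business events
broker_2 = MeshBroker("broker-2")  # distributes only new orders

bridge(
    broker_1,
    broker_2,
    "business-events",
    keep=lambda e: e["type"] == "order.created",
)

fulfilment: list[dict] = []
broker_2.subscribe("business-events", fulfilment.append)

broker_1.publish("business-events", {"type": "order.created", "id": "o-1"})
broker_1.publish("business-events", {"type": "invoice.paid", "id": "i-9"})
```

Applications attached to the second broker receive only the new order, while the first broker continues to hold the larger collection.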

Integration of Event Brokers and iPaaS

An iPaaS usually comprises APIs, API management, and connectors to databases. It supports sequential orchestration, in which a transaction is processed across the different applications involved in handling it. An iPaaS also maintains a library, or catalog, of pre-built APIs and connectors that can be brought into the development environment and configured. Because an iPaaS supports integration, it also provides mapping and transformation so that source data is converted to the target data structure.
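The mapping and transformation step can be illustrated with a small Python sketch. The field names, `FIELD_MAP`, `CONVERTERS`, and `transform` are all hypothetical; an actual iPaaS typically configures such mappings through its own tooling rather than hand-written code.

```python
# Hypothetical mapping from source field names to target field names.
FIELD_MAP = {"OrderNo": "order_id", "CustName": "customer", "Amt": "total"}

# Optional type conversions, keyed by target field name.
CONVERTERS = {"total": float}


def transform(source: dict) -> dict:
    """Rename mapped fields, convert types, and drop unmapped fields."""
    target = {}
    for src_key, dst_key in FIELD_MAP.items():
        if src_key in source:
            value = source[src_key]
            convert = CONVERTERS.get(dst_key)
            target[dst_key] = convert(value) if convert else value
    return target


record = transform(
    {"OrderNo": "o-1", "CustName": "Ada", "Amt": "19.90", "Internal": "x"}
)
```

Keeping the mapping in data rather than in code paths makes it easier to apply the same conversion consistently wherever the record is synced.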

REST APIs are a crucial asset in an iPaaS, supporting both request-style commands, such as GET, and push-style commands, such as POST. Push style implies an API supports the delivery of an event, often used to initiate an action. REST APIs facilitate one-to-one interactions. AsyncAPI is an emerging specification for defining an API that supports one-to-many asynchronous interactions. In essence, AsyncAPI caters to event producers and event subscribers, much like an event broker.

AsyncAPI offers a mechanism for packaging PubSub into a callable service. However, while event brokers excel at managing PubSub, customers are likely to prefer utilizing their iPaaS catalog to call the PubSub service.

Becoming Event-Driven Doesn’t Imply Bidding Farewell to APIs and Connectors

APIs are fundamental in integrating data from events into the organization, encompassing both REST-based APIs that use request/response patterns and the emerging AsyncAPI specification, which more naturally supports the PubSub patterns commonly used by events. APIs are also vital in their own right for automatically initiating subsequent actions in processes, including pushing data to or pulling data from external sources. Additionally, data on demand, web applications, data/services monetization, and participation in marketplaces will continue to depend on APIs as a front end to events.

An enterprise iPaaS is vital to ensuring all these technologies function in tandem. It ensures events and the consequent data are processed accurately, making integrations more fault-tolerant with less bandwidth demand. The iPaaS system is also crucial in maintaining consistent mapping and proper data transformation when syncing data across the enterprise, including data from event streams. The integration platform must operate smoothly with event brokers and/or event meshes to respond to events, coordinate subsequent data updates across systems, and handle any exceptions in processing.

Advantages

Companies that incorporate event-driven automation into their integration array unlock several key technical and business benefits that provide new insights and enhance data reliability across the company.

Business Advantages

» Assimilate new data. By accessing untapped data streams, either from internal or external sources, companies can enhance organizational insights, offering a more comprehensive view. When data streams are integrated effectively and promptly, it results in quicker and improved business decisions by employees across the company.

» Enhance response time. Real-time processing of business events simplifies the task of responding more quickly with every customer, employee, and partner interaction in two ways. Firstly, it enables wider automation, minimizing wait times and manual interventions between process steps. Secondly, it ensures responses are always driven by the most current data, regardless of the application in use.

Technical Advantages

» Integrations become decoupled. Integrations are subdivided into smaller components, making it easier to reuse components in other integration patterns and applications. This reduces the overall administrative load on IT to manage point-to-point integrations, creating a more flexible architecture.

» Enhanced analytics become feasible. Companies with multiple SaaS solutions from various providers can use events as part of their business process integration/orchestration as well as to consolidate data changes in a central data store for analytics, facilitating a convergence of process and data integration specifically with SaaS applications.

Current Scenario and Insights

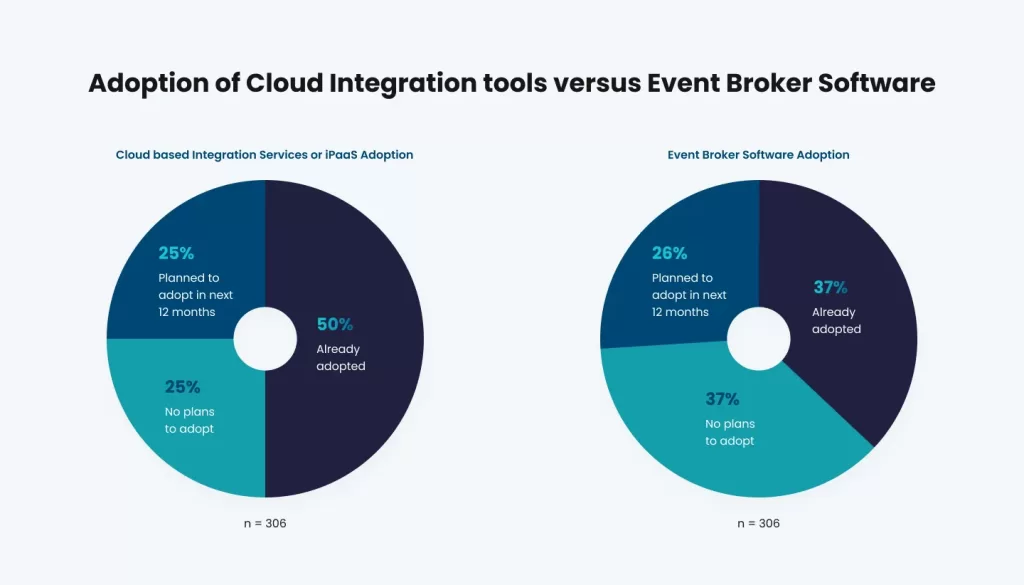

Cloud-based integration tools are currently more widely adopted than event brokering tools (see Figure 2). This could suggest that organizations do not fully understand how events can be integrated into an overarching integration framework to better support new patterns and meet business requirements.

Moreover, almost 75% of companies that have adopted event broker tools did so over a year ago, according to IDC research, and only about half of these have gone beyond the initial use case. This pattern might suggest an uncoordinated integration strategy, potentially curtailing the benefits that could be derived from event-driven automation with integration.

SAP Integration Suite and Its Event Mesh Capabilities

The SAP Integration Suite now includes an advanced event mesh as a new service capability within its Business Technology Platform (BTP). This new feature enhances the existing Integration Suite by supporting emerging integration patterns that disseminate information across the enterprise in a scalable, resilient, and real-time manner, thereby empowering businesses with the latest information. It includes added cross-cloud, on-premise, and edge deployments of event brokers, geared to support complex, hybrid infrastructures.

Features like the AsyncAPI, transaction and replay support, along with the visualization of decentralized event streaming make the deployment and management of event stream processing more convenient. SAP’s event mesh capabilities are designed to assist organizations in achieving seamless business process automation, speeding up the connectivity of business systems, and fast-tracking their integration modernization journey.

SAP BTP and Its Event Mesh Capabilities

SAP Business Technology Platform’s (BTP) event mesh is a novel service feature that enhances the capabilities of SAP’s Integration Suite. It’s designed to cater to emerging integration patterns that distribute information across an enterprise in a real-time, scalable, and resilient manner. This ensures businesses can operate based on the most current and accurate data.

SAP BTP’s event mesh broadens the horizon of deployment models, offering additional cross-cloud, on-premise, and edge deployments of event brokers. This is particularly beneficial for complex, hybrid infrastructures that require a flexible and resilient system to manage their data flow.

Key Features of SAP BTP’s Event Mesh:

1. AsyncAPI Support: This feature makes deploying and managing event stream processing easier. AsyncAPI is an open specification for creating an asynchronous API, essentially supporting one-to-one and one-to-many interactions.

2. Transaction and Replay Support: These are crucial for managing data flow and ensuring no data is lost or duplicated during transmission. The replay support function allows for reprocessing of events, which can be crucial in debugging or dealing with system errors.

3. Visualization of Decentralized Event Streaming: This feature provides a graphical representation of the event data flow, making it easier for developers to understand and manage the entire process.

The overarching aim of SAP BTP’s event mesh is to streamline business process automation and hasten the connectivity of business systems. This aligns with the ongoing trend towards event-driven design and the growing need for real-time data processing. The event mesh capabilities significantly expedite the integration modernization journey, equipping organizations to handle the increasing volume and velocity of data.
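The replay support listed among the key features can be pictured, in general terms, as re-reading stored events into a handler. The sketch below illustrates only that general idea and does not represent SAP's API; `ReplayableLog`, `replay`, and `from_offset` are hypothetical names.

```python
from typing import Callable


class ReplayableLog:
    """Keeps every event so that consumers can reprocess them later."""

    def __init__(self) -> None:
        self._events: list[dict] = []

    def append(self, event: dict) -> None:
        self._events.append(event)

    def replay(self, handler: Callable[[dict], None], from_offset: int = 0) -> int:
        """Feed stored events to handler, starting at from_offset.

        Returns the number of events replayed.
        """
        count = 0
        for event in self._events[from_offset:]:
            handler(event)
            count += 1
        return count


log = ReplayableLog()
for qty in (1, 2, 3):
    log.append({"type": "order.created", "qty": qty})

totals = {"qty": 0}


def count_quantity(event: dict) -> None:
    """Example handler, e.g. one redeployed after a bug fix."""
    totals["qty"] += event["qty"]


# Rebuild the derived total from the full event history.
replayed = log.replay(count_quantity)
```

Replaying from a later offset reprocesses only the tail of the history, which is useful when only recent events were affected by an error.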

However, it’s important to remember that successful implementation requires developers to update their skills, as designing event-driven automation differs from conventional API utilization and stateful web application development.

Challenges

A primary hurdle associated with the use of event mesh and brokering is the limited developer experience in designing event-driven automation. This can decelerate the delivery speed and inhibit the realization of benefits. While developers are skilled in using APIs and developing stateful web applications, creating event-based and stateless automation demands updated skills.

Conclusion

Organizations aiming to modernize their integration architecture must closely examine how event-driven automation can bolster the speed and reliability of integration and better equip the business for a future that demands faster and more responsive operations. Enterprises must ensure that any deployed integration tools also encompass this capability to future-proof their technology investment more effectively.